Installation

Prototype: OmniVision was exhibited at Gallery II at the School of
Art and Design, San Jose State University, San Jose, CA. It ran from
November 1 to November 5, 2004.

"It looks very medieval." -Joel Slayton

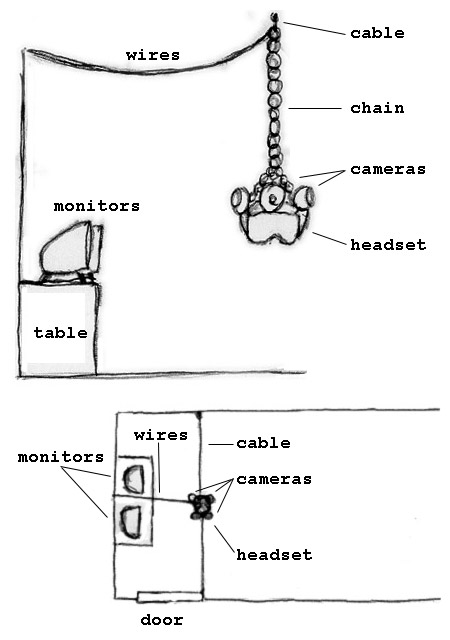

The headset was suspended from a chain attached to a wire cable
track that traversed the width of the gallery space. Participants
could move back and forth along the length of the cable. However,
participants were prevented from moving perpendicular to the cable,
across the length of the gallery. This restriction was imposed
because of concerns about interference with the other exhibitions.
Two monitors were displayed side by side. The left monitor displayed
the current user's view. The right monitor displayed the video
documentation of the Prototype Test Experiments.

The Omni software ran on a 3 GHz PC located under the table. The
feeds from the four Logitech QuickCam Pro 400 cameras ran into the
PC, which processed the information and sent a video feed back out
to the Virtual IO headset display. The experiment videos ran from a
Mac G4, also located under the table.

Technical

The Prototype: OmniVision software was written in Max/MSP with
Jitter and Tap Tools. The main file, omni, is responsible for taking
in the streaming video from the camera feeds and sending it to the
sub-processes. It then displays the resulting video stream and
records image sequence documentation based on motion.

The pkeycolor sub-process file simply sets up an easy way to select
the (background) color that is removed and replaced with
transparency on the fly.
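The keying step can be illustrated outside of Max/MSP. The following
is a minimal Python sketch, not the pkeycolor patch itself: the
function name key_out, the per-channel distance test, and the
tolerance value are all assumptions made for illustration.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def key_out(frame: List[List[RGB]], key_color: RGB,
            tolerance: int = 30) -> List[List[RGBA]]:
    """Return an RGBA copy of frame with pixels near key_color made transparent.

    `tolerance` is a hypothetical parameter: the largest per-channel
    difference from the key color that still counts as background.
    """
    keyed = []
    for row in frame:
        keyed_row = []
        for (r, g, b) in row:
            # Largest per-channel distance from the selected key color.
            distance = max(abs(r - key_color[0]),
                           abs(g - key_color[1]),
                           abs(b - key_color[2]))
            alpha = 0 if distance <= tolerance else 255
            keyed_row.append((r, g, b, alpha))
        keyed.append(keyed_row)
    return keyed


# A 2x2 test frame: the left column is close to pure green, the right is not.
frame = [[(0, 255, 0), (200, 30, 40)],
         [(10, 240, 5), (0, 0, 0)]]
keyed = key_out(frame, key_color=(0, 255, 0))
```

Pixels whose alpha is 0 are the ones through which another feed
would show once the layers are combined.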

The pkeybalance file does all the comparison computations. It takes
in two video sources and a key color (the background color replaced
with transparency). A motion index is a floating point number
between 0 and 1, generated by comparing the current frame of a video
feed to the previous frames and taking an average (0 meaning no
change). The motion indexes of the two feeds are compared. One feed
is keyed into the background of the other based on the motion index.
The higher the index, the more it bleeds into the foreground. When
the motion on a feed is so great that the motion index reaches 1 and
the background totally usurps the foreground, pkeybalance switches
the ordering of the layers. The previous background feed is now the
foreground and vice versa.
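The motion index and the layer swap can be sketched in Python as
follows. This is an illustration under stated assumptions rather than
the Tap Tools calculation or the pkeybalance patch: frames are
flattened grayscale lists with values in 0..1, the index is the mean
absolute difference against each stored previous frame, and the bleed
amount is driven by the background feed's index alone. The names
motion_index and LayerBalance are hypothetical.

```python
from typing import List, Sequence

Frame = List[float]  # flattened grayscale frame, values in 0..1 (assumption)


def motion_index(current: Frame, previous: Sequence[Frame]) -> float:
    """Average the mean absolute difference between current and each old frame."""
    if not previous:
        return 0.0  # nothing to compare against: treat as no change
    total = 0.0
    for old in previous:
        total += sum(abs(c - o) for c, o in zip(current, old)) / len(current)
    return min(1.0, total / len(previous))


class LayerBalance:
    """Tracks which of two feeds is currently the foreground layer."""

    def __init__(self, foreground: str, background: str) -> None:
        self.foreground = foreground
        self.background = background

    def step(self, background_motion: float) -> float:
        """Return how far the background bleeds into the foreground (0..1)."""
        bleed = max(0.0, min(1.0, background_motion))
        if bleed >= 1.0:
            # The background has fully taken over, so swap the layer order.
            self.foreground, self.background = self.background, self.foreground
        return bleed


# Half the pixels changed against one old frame, none against the other.
index = motion_index([1.0, 1.0, 0.0, 0.0],
                     [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0]])

layers = LayerBalance(foreground="feed_a", background="feed_b")
partial = layers.step(0.4)   # feed_b bleeds in, feed_a stays on top
top_before = layers.foreground
full = layers.step(1.0)      # feed_b usurps feed_a, so the layers swap
top_after = layers.foreground
```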

Omni calls pkeybalance three times: two feed pairs are compared and
layered, and the resulting two feeds are then compared and layered
in the same way. In this way the amount that the feeds cover one
another, as well as the order in which the feeds are layered, is in
constant flux. Thus the directional views are combined and displayed
such that the user witnesses a collage of his or her surroundings,
with the directional video feed that currently has the most motion
always being the topmost layer.
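The three-call arrangement amounts to a small two-round comparison.
The Python sketch below shows only the resulting layer order. The
feed labels (front, back, left, right), the way the feeds are paired,
and the rule that a combined feed inherits the larger motion index of
its two parts are assumptions for illustration. In the installation
the index of a combined feed would come from the video itself.

```python
from typing import Dict, List, Tuple

Stack = Tuple[List[str], float]  # (layers from top to bottom, motion index)


def layer_pair(a: Stack, b: Stack) -> Stack:
    """Place the stack with more motion on top of the other."""
    top, bottom = (a, b) if a[1] >= b[1] else (b, a)
    # Assumption: the combined feed carries the larger of the two indexes.
    return (top[0] + bottom[0], max(a[1], b[1]))


def compose(motion: Dict[str, float]) -> List[str]:
    """Layer four feeds with three pairwise comparisons."""
    feeds = {name: ([name], index) for name, index in motion.items()}
    first = layer_pair(feeds["front"], feeds["back"])    # call 1
    second = layer_pair(feeds["left"], feeds["right"])   # call 2
    return layer_pair(first, second)[0]                  # call 3


order = compose({"front": 0.1, "back": 0.3, "left": 0.8, "right": 0.2})
print(order)
```

With these sample values the left feed has the most motion and ends
up as the topmost layer, followed by its partner and then the other
pair.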

Max/MSP is a visual, object-oriented programming application
originally written by Miller Puckette. Currently Miller is
developing a related freeware application called Pd (Pure Data).
Max/MSP is primarily designed for audio processing. Jitter is an
added module for video. Tap Tools is an added library with a method
for video motion index calculation.